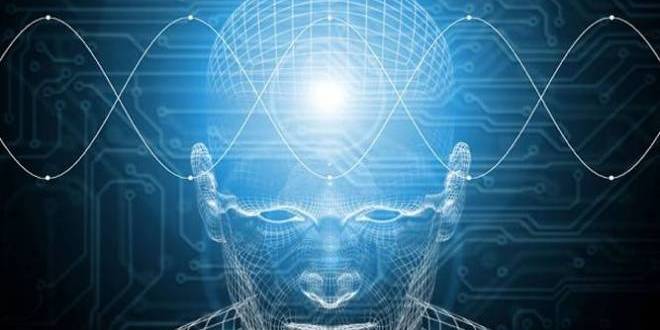

New computer programme can instantly read your mind

Mon 01 Feb 2016, 13:45:00

Scientists, including one of Indian origin, have developed computer software that decodes brain signals in real time and, with almost 96 per cent accuracy, identifies what kind of image a person is seeing.

"We were trying to understand, first, how the human brain perceives objects in the temporal lobe, and second, how one could use a computer to extract and predict what someone is seeing in real time," said Rajesh Rao, a computational neuroscientist at the University of Washington (UW).

"Clinically, you could think of our result as a proof of concept towards building a communication mechanism for patients who are paralysed or have had a stroke and are completely locked-in," Rao said.

The study involved seven epilepsy patients receiving care at Harborview Medical Centre in the US. Their seizures could not be controlled with medication, so each had undergone surgery in which electrodes were temporarily implanted in the temporal lobes of the brain to locate the seizures' focal points, said Jeff Ojemann, a neurosurgeon at the UW School of Medicine.

Temporal lobes process sensory input and are a common site of epileptic seizures. Situated behind mammals' eyes and ears, the lobes are also involved in Alzheimer's disease and other dementias and appear somewhat more vulnerable than other brain structures to head trauma, Ojemann said.

Seated in front of a computer monitor, the patients were shown a random sequence of pictures: brief (400-millisecond) flashes of images of human faces and houses, interspersed with blank grey screens. Their task was to watch for an image of an upside-down house.
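To make the experimental design concrete, here is a minimal Python sketch of how such a stimulus sequence might be generated. The function name `make_schedule`, the grey-screen duration and the share of upside-down house targets are assumptions for illustration; the article states only that each image flashed for 400 milliseconds.

```python
import random

IMAGE_MS = 400  # flash duration given in the article
GREY_MS = 400   # assumption: grey-screen duration is not stated

def make_schedule(n_images, target_prob=0.05, seed=0):
    """Return a hypothetical stimulus list of (onset_ms, duration_ms, label).

    Every image is followed by a grey screen. With probability target_prob
    the image is an upside-down house (the target patients watched for);
    otherwise it is a face or an upright house, chosen at random.
    """
    rng = random.Random(seed)  # seeded so the sequence is reproducible
    schedule = []
    t = 0
    for _ in range(n_images):
        if rng.random() < target_prob:
            label = "inverted_house"
        else:
            label = rng.choice(["face", "house"])
        schedule.append((t, IMAGE_MS, label))
        t += IMAGE_MS
        schedule.append((t, GREY_MS, "grey"))
        t += GREY_MS
    return schedule

if __name__ == "__main__":
    for onset, duration, label in make_schedule(4):
        print(f"{onset:5d} ms  {duration} ms  {label}")
```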

"We got different responses from different (electrode) locations; some were sensitive to faces and some were sensitive to houses," Rao said.

The software sampled and digitised the brain signals 1,000 times per second to extract their characteristics.
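Sampling 1,000 times per second means one sample per millisecond, so a 400-millisecond image flash spans 400 samples and the 20-millisecond timing precision the researchers report corresponds to 20 samples. The Python sketch below cuts a stimulus-locked window from a recording and computes two simple features. The helper names and the choice of mean voltage and mean power as features are illustrative assumptions; the article does not say which characteristics the software extracted.

```python
import math

SAMPLE_RATE_HZ = 1000  # 1,000 samples per second, as stated in the article

def ms_to_samples(ms, rate_hz=SAMPLE_RATE_HZ):
    """Convert a duration in milliseconds to a whole number of samples."""
    return round(ms * rate_hz / 1000)

def epoch(signal, onset_ms, length_ms=400):
    """Cut the window of samples that follows a stimulus onset."""
    start = ms_to_samples(onset_ms)
    return signal[start:start + ms_to_samples(length_ms)]

def features(window):
    """Two illustrative features for a non-empty window: mean voltage and mean power."""
    n = len(window)
    mean_v = sum(window) / n
    power = sum(x * x for x in window) / n
    return mean_v, power

if __name__ == "__main__":
    # Synthetic 2-second trace: a 10 Hz sine wave standing in for one electrode.
    trace = [math.sin(2 * math.pi * 10 * i / SAMPLE_RATE_HZ)
             for i in range(2 * SAMPLE_RATE_HZ)]
    w = epoch(trace, onset_ms=400)
    print(len(w), features(w))
```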

The software also analysed the data to determine which combinations of electrode locations and signal types correlated best with what each subject actually saw. Those combinations carried the most information, which is what made the decoded signals highly predictive.
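One simple way to find which electrode and signal type tracks a given image category is to correlate each feature with a 0/1 indicator for that category across trials and rank the results. The Python sketch below does this with hypothetical data; the function names, electrode labels and values are invented, and the article does not specify the statistic the researchers used.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # a constant column carries no information
    return cov / (sx * sy)

def rank_features(feature_table, labels, target):
    """Rank (electrode, feature) columns by |correlation| with one category.

    feature_table maps a column name such as ("e1", "power") to one value
    per trial; labels holds the category each trial actually showed.
    """
    indicator = [1.0 if lab == target else 0.0 for lab in labels]
    scores = {col: pearson(vals, indicator) for col, vals in feature_table.items()}
    return sorted(scores.items(), key=lambda kv: abs(kv[1]), reverse=True)

if __name__ == "__main__":
    labels = ["face", "house", "face", "grey", "house", "face"]
    table = {
        ("e1", "power"): [5.0, 1.0, 6.0, 0.5, 1.2, 5.5],      # face-sensitive site
        ("e2", "power"): [1.0, 4.0, 1.1, 0.4, 4.2, 0.9],      # house-sensitive site
        ("e3", "mean"): [0.1, 0.1, 0.2, 0.2, 0.15, 0.15],     # same average on face and non-face trials
    }
    for col, r in rank_features(table, labels, "face"):
        print(col, round(r, 2))
```

A strongly negative score is still informative: here the house-sensitive site ranks second for "face" because its activity drops on face trials.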

The researchers trained an algorithm on the subjects' responses to the first two-thirds of the images, whose labels were known. They then examined the brain signals for the final third of the images, whose labels were unknown to them, and predicted with 96 per cent accuracy whether and when (to within 20 milliseconds) a subject was seeing a house, a face or a grey screen.
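The train-on-two-thirds, test-on-the-rest procedure can be illustrated with a nearest-centroid classifier, a deliberately simple stand-in: the article does not name the algorithm the team actually used. The data are synthetic and the function names are hypothetical, so the accuracy this sketch prints says nothing about the study's 96 per cent figure.

```python
import math
import random

def split_two_thirds(trials):
    """Train on the first two-thirds of the trials and hold out the rest."""
    cut = (2 * len(trials)) // 3
    return trials[:cut], trials[cut:]

def fit_centroids(train):
    """Average the feature vectors of each label (nearest-centroid training)."""
    sums, counts = {}, {}
    for x, label in train:
        acc = sums.setdefault(label, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {lab: tuple(v / counts[lab] for v in acc) for lab, acc in sums.items()}

def predict(centroids, x):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda lab: math.dist(x, centroids[lab]))

def accuracy(centroids, test):
    """Fraction of held-out trials whose label is predicted correctly."""
    hits = sum(predict(centroids, x) == label for x, label in test)
    return hits / len(test)

if __name__ == "__main__":
    rng = random.Random(0)
    # Synthetic two-feature trials; these class means are invented.
    means = {"face": (5.0, 1.0), "house": (1.0, 5.0), "grey": (0.5, 0.5)}
    trials = []
    for _ in range(300):
        label = rng.choice(list(means))
        mx, my = means[label]
        x = (mx + rng.gauss(0, 0.2), my + rng.gauss(0, 0.2))
        trials.append((x, label))
    train, test = split_two_thirds(trials)
    model = fit_centroids(train)
    print(f"held-out accuracy: {accuracy(model, test):.2f}")
```

Keeping the split in presentation order, rather than shuffling, mirrors the article's description of testing on the final third of the images.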

The study was published in the journal PLOS Computational Biology.


© 2025 Etemaad Daily News, All Rights Reserved.
